SAFE: Video Challenge

👉 All participants are required to register for the competition by filling out this Google Form

📊 Overview • 🥇 Detailed Leaderboard • 🏆 Prize • 📢 Results Sharing • 📝 Tasks • 🤖 Model Submission • 📂 Create Model Repo • 🔘 Submit • 🆘 Helpful Stuff • 🗂 Training Data • 🔍 Evaluation • ⚖️ Rules

📣 Updates

2025-10-10

- Competition tasks are closed. Thanks to all the participants.

- Private Leaderboard is now visible for every task: SAFE Video Challenge Collection

- Competition results will be presented at APAI @ ICCV 2025 on October 19th.

- Look out for our paper with competition details.

2025-10-09

- Competitions close at 23:59 UTC on October 10th. The task spaces will not accept any more submissions after that.

- Final Rankings. Final rankings will be based on balanced accuracy on the private set. Remember that the private set is a superset of the public set. It contains examples from additional real and synthetic sources not present in the public set. Results on the private set will be shared after the competition closes.

- (Optional) Select Your Submissions For Private Leaderboard. You are allowed to select up to 2 submissions for the private leaderboard. If you do not make any selections, your submission with the highest public score will be chosen. If you do make selections, the submission with the highest private score (out of the 2 selected) will be used for the private leaderboard. You can do this by checking the “selected” box next to a submission in the “My Submissions” section of each task space on Huggingface. For example, this allows you to choose an additional submission with a lower public score for the private leaderboard. In this competition, the private set is a superset of the public set, so we don’t expect this option to make much difference, but we wanted to clarify it just in case.
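The selection rule above can be sketched in a few lines of Python. This is only an illustration of the rule as described, not the organizers' code: the `Submission` class, its field names, and `final_submission` are hypothetical, and tie-breaking is not specified by the rules, so the sketch simply keeps the first maximum.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Submission:
    # Hypothetical record; field names are assumptions for illustration.
    submission_id: str
    public_score: float     # balanced accuracy on the public set
    private_score: float    # balanced accuracy on the private set
    selected: bool = False  # state of the "selected" checkbox


def final_submission(submissions: List[Submission]) -> Submission:
    """Return the submission that counts for the private leaderboard."""
    selected = [s for s in submissions if s.selected]
    if len(selected) > 2:
        raise ValueError("at most 2 submissions may be selected")
    if not selected:
        # No selection made: the best public score is chosen.
        return max(submissions, key=lambda s: s.public_score)
    # Selections made: the best private score among the selected ones is used.
    return max(selected, key=lambda s: s.private_score)


subs = [
    Submission("a", public_score=0.90, private_score=0.80),
    Submission("b", public_score=0.85, private_score=0.88),
    Submission("c", public_score=0.70, private_score=0.60),
]
print(final_submission(subs).submission_id)  # no selection: best public score
```

Selecting submissions "b" and "c" instead would make "b" the final entry, since it has the higher private score of the two.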

2025-09-18

- Task 2 - Detection of Synthetic Video Content after post-processing is now open. SAFE Video Challenge Collection

- This task will measure impact of common post-processing techniques on model performance.

- In this task, we apply several common post-processing techniques (resizing, compression, etc.) to a subset of Task 1 data.

- Please submit your best model from Task 1 to this task so that we can have a meaningful comparison.

- We’ll update the detailed leaderboard to show model performance conditioned on each (anonymized) post-processing operation.

2025-08-22

- Automatic Submission Log Summaries. They can be accessed under “My Submissions” -> “Admin Comment” in each task space.

- Upgraded Compute to have 2x CPU & GPU memory: 1x Nvidia L40S, 8 vCPU, 62 GB RAM, 48 GB VRAM.

- Updated Sample Submission. Some of the videos can get large (4K and 60 fps). The updated submission repo includes a few tips to better manage memory: (1) moving to GPU after every frame, (2) checking memory and (3) center cropping. See sample repo.
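To see why large clips need care, the sketch below estimates the memory of a fully decoded clip and computes a center-crop box. It is not taken from the sample repo: `clip_bytes` and `center_crop_box` are hypothetical helpers, and the float32 RGB layout and the 1024-pixel crop limit are assumptions chosen for illustration.

```python
def clip_bytes(height, width, num_frames, channels=3, bytes_per_value=4):
    """Memory needed to hold a whole decoded clip (assumes float32 RGB frames)."""
    return height * width * channels * bytes_per_value * num_frames


def center_crop_box(height, width, max_side):
    """Return (top, left, crop_h, crop_w) of a centered crop no larger than max_side."""
    crop_h = min(height, max_side)
    crop_w = min(width, max_side)
    top = (height - crop_h) // 2
    left = (width - crop_w) // 2
    return top, left, crop_h, crop_w


# A 5-second 4K clip at 60 fps has 300 frames.
full = clip_bytes(2160, 3840, 300)
top, left, crop_h, crop_w = center_crop_box(2160, 3840, max_side=1024)
cropped = clip_bytes(crop_h, crop_w, 300)
print(f"full clip: {full / 2**30:.1f} GiB, cropped: {cropped / 2**30:.1f} GiB")
```

With an array-like frame of shape (height, width, channels), the crop would be applied as `frame[top:top + crop_h, left:left + crop_w]`. Processing frames one at a time, rather than stacking the whole clip, keeps peak memory close to the size of a single frame.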

2025-08-04

- Detailed Leaderboard is Live. It shows detailed performance: TPR, TNR and balanced accuracy conditioned on anonymized source.

2025-07-30

- Added pointers to some publicly available training datasets. While we do not provide training data, you are free to use anything you want for training.

- The challenge duration is extended until the workshop (Oct 19th). We’ll announce a cut-off date (early October) at which the leading teams will be determined.

- There will also be a remote option to participate in the competition session at the APAI workshop.

2025-07-18

- Task 1 is live: SAFE Video Challenge Collection

2025-07-01

- Pilot task is live: SAFE Video Challenge Collection

📊 Overview

ULRI’s Digital Safety Research Institute is excited to announce the SAFE: Synthetic Video Detection Challenge at the Authenticity and Provenance in the Age of Generative AI (APAI) workshop at ICCV 2025.

The SAFE: Synthetic Video Detection Challenge will drive innovation in detecting and attributing synthetic and manipulated video content. It will focus on several critical dimensions of synthetic video detection performance, including generalizability across diverse visual domains, robustness against evolving generative video techniques, and scalability for real-world deployment. As generative video technologies advance rapidly—with increasing accessibility and sophistication of image-to-video, text-to-video, and adversarially optimized pipelines—the need for effective and reliable solutions to authenticate video content has become urgent. We aim to mobilize the research community to address this need and strengthen global efforts in media integrity and trust.

All participants are required to register for the competition

- Sign up here to participate and receive updates: Google Form

- For info please contact: SafeChallenge2025@gmail.com

- See the instructions on how to submit, 🆘 Helpful Stuff, the debug example, and open issues for reference, or join our discord server

- Tasks will be hosted in the SAFE Video Challenge Collection on Huggingface Hub 🤗

❗Important: To ensure the challenge emphasizes generalizable detection methods, approaches that rely on analyzing metadata, file format, etc. are discouraged and ineligible for participation.

📅 Schedule

- Pilot task opens July 1.

- Task 1 opens in mid-July

- Additional tasks may open later

- Competition tasks will remain open until the APAI workshop at ICCV on October 19th.

🥇 Winners

UL Research Institutes’ Digital Safety Research Institute has concluded the 2025 SemaFor SAFE: Synthetic Video Detection Challenge, advancing the science of detecting AI-generated video content. The algorithms, results, and winners were presented at the “Authenticity and Provenance in the Age of Generative AI Workshop” at the International Conference on Computer Vision.

🥇 1st Place : Team GRIP-UNINA: PI Davide Cozzolino, Image Processing Research Group, University Federico II of Naples, Italy

🥇 2nd Place : Team DASH: PI Simon Woo, DASH Lab, Sungkyunkwan University, South Korea

🥇 3rd Place : Team ISPLynx: PI Paolo Bestagini, Image and Sound Processing Lab (ISPL), Politecnico di Milano

🥇 Detailed Leaderboard

https://safe-challenge-video-challenge-leaderboard.hf.space

🏆 Prize

The most promising solutions may be eligible for research grants to further advance their development. A travel stipend will be available to the highest-performing teams to support attendance at the APAI workshop at ICCV 2025, where teams can showcase their technical approach and results. Remote participation options will also be available.

📢 Results Sharing

In addition to leaderboard rankings and technical evaluations, participants will have the opportunity to share insights, methodologies, and lessons learned through an optional session at the APAI Workshop at ICCV 2025. Participants will be invited to present at the workshop, showcasing their approach and findings to fellow researchers, practitioners, and attendees. To facilitate this engagement, we will collect 250-word abstracts in advance. These abstracts should briefly describe your method, key innovations, and any noteworthy performance observations. Submission details and deadlines will be announced on the challenge website. This is a valuable opportunity to contribute to community knowledge, exchange ideas, and build collaborations around advancing synthetic video detection.

🧠 Challenge Tasks

The SAFE: Synthetic Video Challenge at APAI @ ICCV 2025 will consist of several tasks. This competition will be fully blind. No data will be released. Participants will need to submit their models on our Huggingface space. Only a small sample dataset will be available for debugging purposes. You are free to use anything you want for training your models. We provide some pointers to publicly available datasets. Each team will have a limited number of submissions per day. If your submission fails due to an error, you can reach out to us and we can help debug and reset this limit (discord server, SafeChallenge2025@gmail.com).

All tasks will be hosted in our SAFE Video Challenge Collection on Huggingface Hub 🤗.

❗Important: submissions that work based on analyzing metadata, file format, etc. are not eligible.

🚀 Pilot Task (✅ Open): Detection of Synthetic Video Content

- The objective is to detect synthetically generated video clips.

- Your model must submit a binary decision for every example.

- The submissions will be ranked by balanced accuracy.

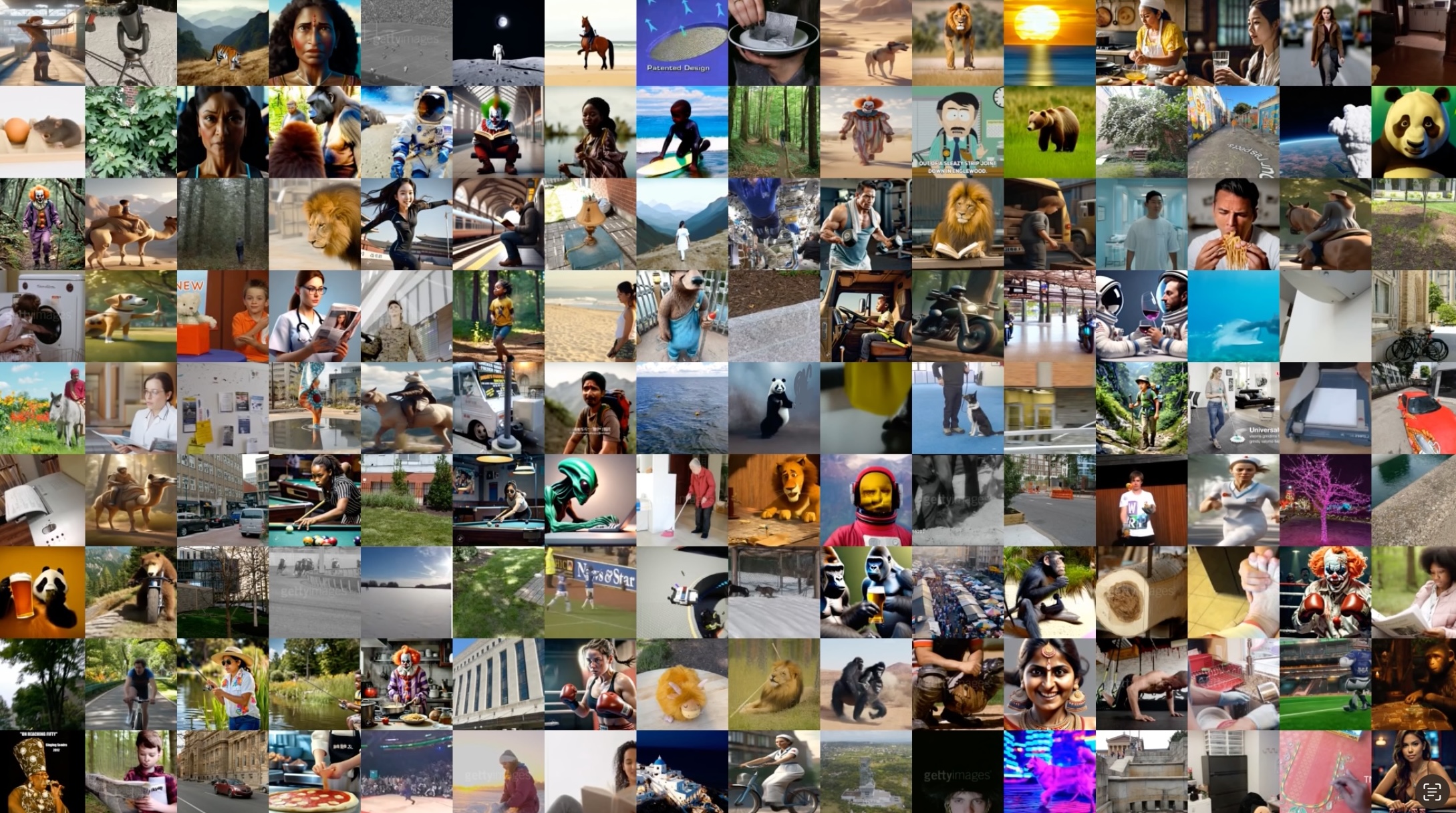

- The data consists of real and synthetic videos. The latter are generated with several older generative models. The former (reals) will be pulled from various sources. The videos will cover a wide range of content such as natural scenes, human activities, animals, etc. There is no audio in the videos. Please see the representative grid.

- The video data will consist of various common compression formats, varying resolution, bitrate, FPS and length. The length of each clip will vary averaging around 5 seconds but no longer than 60 seconds. The resolution of the video will also vary but will not exceed 2000 x 2000 pixels.

- The purpose of the pilot task is to allow participants to test initial submission logistics, to support early experimentation, and to understand task dynamics before later tasks open.

- For this task, the data will be randomly divided into a private and public split.

- Submit here: https://huggingface.co/spaces/safe-challenge/VideoChallengePilot

🎯 Task 1 (✅ Open): Detection of Synthetic Video Content

- The objective is to detect synthetically generated video clips.

- The task is similar in structure to the pilot task, but it contains newer generative models of higher quality and realism. The real videos also cover a wider range of content and sources.

- The synthetic videos are generated by a range of state-of-the-art techniques, including image/text-to-video and other generative video models.

- The real videos are pulled from various sources and cover a wide range of content such as natural scenes, human activities, animals, etc.

- There is no audio in the videos.

- The video data will consist of various common compression formats, varying resolution, bitrate, FPS and length. The length of each clip will vary averaging around 5-10 seconds. The resolution of the video will also vary but will not exceed 2000 x 2000 pixels.

- The focus will be on generalization to unseen generators, robustness to visual variability, and applicability to real-world forensics workflows.

- For this task, the public set will consist of a subset of generator models and sources. The private set will include additional models and sources not present in the public set. Performance breakdown by source/model will be available in the detailed leaderboard.

- Submit here: https://huggingface.co/spaces/safe-challenge/VideoChallengeTask1

🎯 Task 2 (✅ Open): Detection of Synthetic Video Content after Post-processing

- The objective is to detect synthetically generated video clips.

- The task is similar in structure to Task 1, but the videos are post-processed with several methods.

- Post-processing includes common operations seen on the internet such as compression, resizing, cropping and others.

- The videos come from the same models and sources as in Task 1.

- Please submit your best model from Task 1 to this task so that we can measure how much post-processing impacts model performance.

- Submit here: https://huggingface.co/spaces/safe-challenge/VideoChallengeTask2

🔮 Additional tasks will be announced leading up to ICCV 2025. These may explore areas such as manipulation detection, attribution of generative models, laundering detection, or characterization of generative content. Stay tuned for updates on new challenge tracks and associated datasets.

🤖 Model Submission

This is a script-based competition. No data will be released before the competition. A subset of the data may be released after the competition. The competition will be hosted on Huggingface Hub. There will be a limit on the number of submissions per day.

📂 Create Model Repo

Participants will be required to submit their model to be evaluated on the dataset by creating a huggingface model repository. Please use the example model repo as a template.

- The model that you submit will remain private. No one including the challenge organizers will have access to the model repo unless you decide to make the repo public.

- The dataset will be automatically downloaded to `/tmp/data` inside the container during the evaluation run. See the example model for how to load it.

- The model will be expected to read in the dataset and output a file containing an id, binary decision, and detection score for every input example.

- The only requirement is to have a `script.py` in the top level of the repo that saves a `submission.csv` file with the following columns. See the sample practice submission file.

  - `id`: id of the example, string

  - `pred`: binary decision, string, “generated” or “real”

  - `score`: decision score such as a log-likelihood score. Positive scores correspond to generated and negative to real. (Used for computing the AUC.)

- All submissions will be evaluated using the same resources: an NVIDIA L4 GPU instance with 8 vCPUs, 30 GB RAM, and 24 GB VRAM.

- All submissions will be evaluated using the same container, based on the nvidia/cuda:12.6.2-cudnn-runtime-ubuntu24.04 image.

- The default `requirements.txt` file will be installed in the eval environment.

- You can optionally provide a custom `requirements.txt`; just include it in the top level of your submission repo (see the example model repo). Make sure to include `datasets` to read the dataset file.

- During evaluation, the container will not have access to the internet. Participants should include all other required dependencies in the model repo.

- 💡 Remember: you can add anything to your model repo like models, python packages, etc.

- We encourage everyone to test your models locally using a debug dataset. See debug example
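As a rough illustration of the output format described above, the following sketch writes a submission.csv with the three required columns. It is not the example repo's script.py: `decide` and `write_submission` are hypothetical helpers, the scores are placeholders standing in for a real model, and mapping a score of exactly zero to “real” is an assumption, since the rules only specify the meaning of positive and negative scores.

```python
import csv


def decide(score):
    """Map a decision score to a label: positive scores mean generated.

    Treating a score of exactly 0 as "real" is an assumption of this sketch.
    """
    return "generated" if score > 0 else "real"


def write_submission(scores, path="submission.csv"):
    """Write one row per example; `scores` maps example id -> decision score."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=["id", "pred", "score"])
        writer.writeheader()
        for example_id, score in scores.items():
            writer.writerow(
                {"id": str(example_id), "pred": decide(score), "score": float(score)}
            )


if __name__ == "__main__":
    # Placeholder scores; a real script.py would compute these with a model
    # over the dataset found in /tmp/data.
    write_submission({"example_0": 2.5, "example_1": -1.0})
```

Keeping the label derived from the score in one place makes it harder for the `pred` and `score` columns to disagree.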

🔘 Submit

Once your model is ready, it’s time to submit:

- Go to the task submission space (there is a separate space for every task)

- Login with your Huggingface Credentials

- Teams consisting of multiple individuals should plan to submit under one Huggingface account and use the same team name, to facilitate review and analysis of results

- Enter the repo of your model, e.g. `safe-challenge/safe-example-submission`, and click submit! 🎉

- You can check the status of your submission under the `My Submissions` page.

- If the status is failed, you can debug locally or reach out to us via email or on our discord server. Just include the task and submission id. We are happy to debug.

🆘 How to get help

We provide an example model submission repo and a local debug example:

- Take a look at an example model repo: https://huggingface.co/safe-challenge/safe-video-example-submission

- To reproduce all the steps in the submission locally, take a look at the debugging example: debug example

- You won’t be able to see any detailed error if your submission fails since it’s run in a private space. Just reach out to us via email or on our discord server and we can look up the logs. The easiest way is to troubleshoot locally using the above example.
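Since remote errors are not visible, a quick local sanity check of the generated file can catch format problems before submitting. The sketch below is a hypothetical helper, not part of the official debug example; it checks only the column names and value formats stated on this page, and the duplicate-id check is an added assumption.

```python
import csv

REQUIRED_COLUMNS = ["id", "pred", "score"]
ALLOWED_PREDS = {"generated", "real"}


def validate_submission(path):
    """Return a list of problems found in the CSV; an empty list means none found."""
    problems = []
    with open(path, newline="") as f:
        reader = csv.DictReader(f)
        header = reader.fieldnames or []
        missing = [c for c in REQUIRED_COLUMNS if c not in header]
        if missing:
            return [f"missing columns: {missing}"]
        seen_ids = set()
        # Data rows start on line 2, after the header.
        for line_no, row in enumerate(reader, start=2):
            if row["id"] in seen_ids:
                # Assumption: each example id should appear only once.
                problems.append(f"line {line_no}: duplicate id {row['id']!r}")
            seen_ids.add(row["id"])
            if row["pred"] not in ALLOWED_PREDS:
                problems.append(f"line {line_no}: invalid pred {row['pred']!r}")
            try:
                float(row["score"])
            except (TypeError, ValueError):
                problems.append(f"line {line_no}: score is not a number")
    return problems
```

Calling `validate_submission("submission.csv")` after a local run and printing the result gives a short checklist of what to fix.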

🗂️ Training data

We are not providing any training data, so feel free to use anything you want to train your models. Here are a few pointers to existing datasets:

- Deepfake Detection Challenge https://www.kaggle.com/c/deepfake-detection-challenge/data

- GenVideo https://github.com/chenhaoxing/DeMamba?tab=readme-ov-file

- DeepAction Dataset https://huggingface.co/datasets/faridlab/deepaction_v1

- DeeperForensics-1.0 https://github.com/EndlessSora/DeeperForensics-1.0

- ExDDV https://github.com/vladhondru25/ExDDV?tab=readme-ov-file

- VANE-bench https://huggingface.co/datasets/rohit901/VANE-Bench

- Synth Vid Detect https://huggingface.co/datasets/ductai199x/synth-vid-detect

🔍 Evaluation

All submissions will be ranked by balanced accuracy. Balanced accuracy is defined as an average of true positive rate and true negative rate.

- The competition page will maintain a public leaderboard and a private leaderboard. The data will be divided differently between public and private depending on the task.

- The leaderboards will also show true positive rate, true negative rate, AUC and fail rate.

- A detailed public leaderboard will also show error rates for every source, and perhaps additional breakdowns. However, the specific source name will be anonymized.
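The ranking metric can be computed as in the sketch below. It is a minimal illustration, not the organizers' evaluation code: the function names are hypothetical, and treating “generated” as the positive class is an assumption that matches the score convention used for submissions.

```python
def tpr_tnr(labels, preds, positive="generated"):
    """True positive rate and true negative rate for binary string labels."""
    tp = sum(1 for y, p in zip(labels, preds) if y == positive and p == positive)
    fn = sum(1 for y, p in zip(labels, preds) if y == positive and p != positive)
    tn = sum(1 for y, p in zip(labels, preds) if y != positive and p != positive)
    fp = sum(1 for y, p in zip(labels, preds) if y != positive and p == positive)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in labels")
    return tp / (tp + fn), tn / (tn + fp)


def balanced_accuracy(labels, preds):
    """Average of true positive rate and true negative rate."""
    tpr, tnr = tpr_tnr(labels, preds)
    return (tpr + tnr) / 2


# Imbalanced toy set: 8 real clips, 2 generated clips, model predicts "real" always.
labels = ["real"] * 8 + ["generated"] * 2
preds = ["real"] * 10
print(balanced_accuracy(labels, preds))  # plain accuracy would be 0.8
```

The toy example shows why balanced accuracy is used for ranking: a detector that always answers “real” gets a high plain accuracy on an imbalanced set, but its balanced accuracy stays at chance level.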

⚖️ Rules

To ensure a fair and rigorous evaluation process for the Synthetic and AI Forensic Evaluations (SAFE) - Synthetic Video Challenge, the following rules must be adhered to by all participants:

- Leaderboard:

  - The competition will maintain both a public and a private leaderboard.

  - The public leaderboard will show error rates for each anonymized source.

  - The private leaderboard will be used for the final evaluation and will include non-overlapping data from the public leaderboard.

- Submission Limits:

  - Participants will be limited in submissions per day.

- Confidentiality:

  - Participants agree not to publicly compare their results with those of other participants until the other participant’s results are published outside of the conference venue.

  - Participants are free to use and publish their own results independently.

- Compliance:

  - Participants must comply with all rules and guidelines provided by the organizers.

  - Failure to comply with the rules may result in disqualification from the competition and exclusion from future evaluations.

By participating in the SAFE challenge, you agree to adhere to these evaluation rules and contribute to the collaborative effort to advance the field of video forensics.